An Analyst’s Perspective on the Future of Fog Computing

Project GRFF (Global Resilient Fog Fabric)

Three-Layer Fabric

Orchestrates compute across edge, fog, and cloud so work happens where it is fastest and most efficient.

Real-Time Resilience

Designed for autonomy when links fail, keeping essential local services running through disruption.

Quantified Upside, Global Horizon

Models project up to a 90% reduction in 95th-percentile (tail) latency and a 26-37% lower five-year TCO, with a path toward a space-air-ground integrated fabric.

Anticipating the Limits of the Centralized Cloud

My analysis begins with the current state of cloud computing. While powerful, the traditional centralized model shows clear signs of strain when confronted with the exponential growth of data and the increasing demand for real-time applications. Based on current trends, I project that the key challenges will be:

- For applications like autonomous systems and real-time public safety alerts, the round-trip delay to a distant data center will become a critical bottleneck.
- The sheer volume of data from IoT devices will make backhauling everything to the cloud economically infeasible for many organizations.
- Continued reliance on a stable, centralized connection creates a single point of failure that is at odds with the need for mission-critical resilience.

My analysis sought to answer a fundamental question:

What architectural model could address these projected limitations and provide a more robust foundation for the next decade of technology?

The Hypothesis: A Three-Layer Fog Computing Architecture

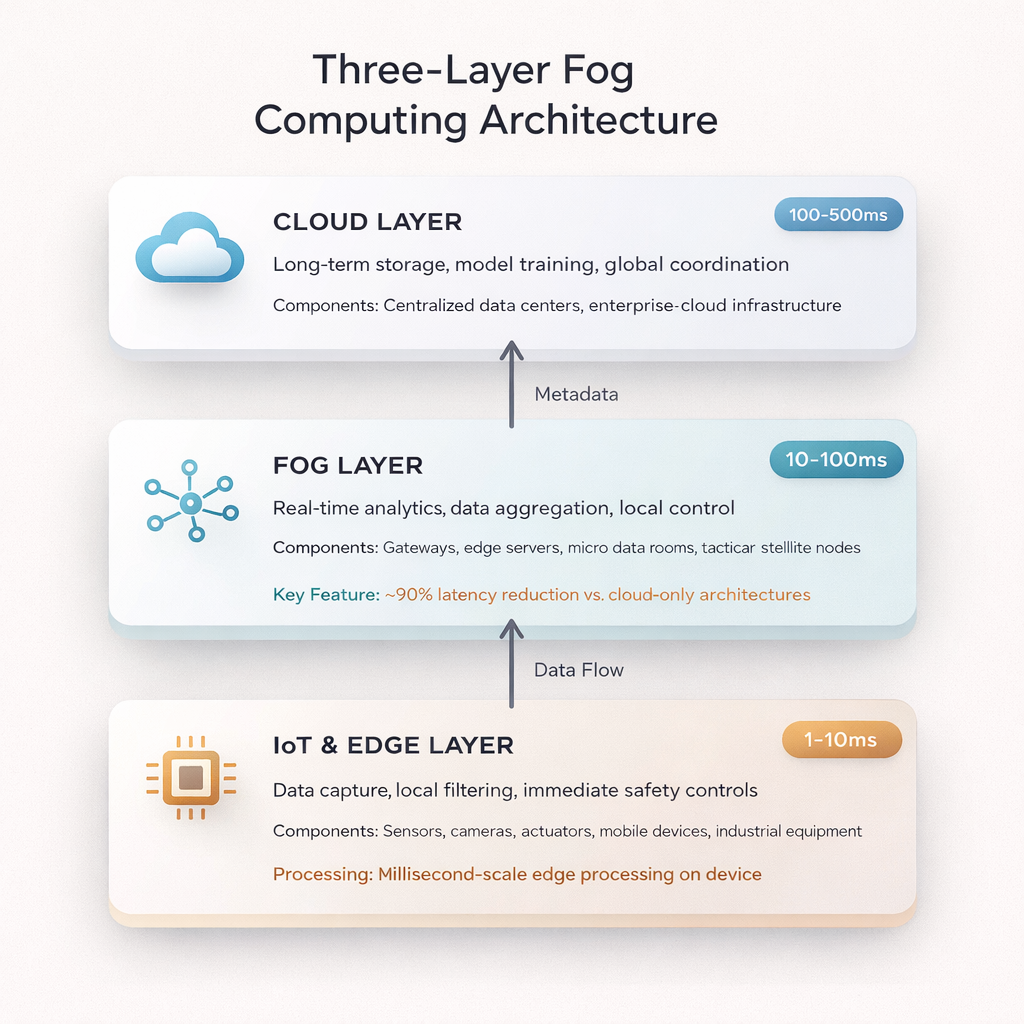

I hypothesize that the solution lies in a hierarchical, three-layer architecture that strategically distributes computation. This model, known as Fog Computing, would serve as a critical intermediary between the network edge and the centralized cloud.

The IoT & Edge Layer:

My model assumes that data sources (sensors, cameras, etc.) will perform initial, simple processing to reduce noise and handle immediate actions (projected latency: 1-10 ms).

The Fog Layer:

I propose a distributed network of fog nodes as a powerful middle tier. My analysis suggests this layer would be responsible for real-time analytics and could operate autonomously, ensuring service continuity (projected latency: 10-100 ms).

The Cloud Layer:

The cloud would then be used for what it does best: heavy, non-time-sensitive workloads such as long-term storage, complex model training, and global coordination (projected latency: 100-500 ms).

This proposed architecture would allow computation to happen at the most logical and efficient location.
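The placement rule implied by the three layer descriptions can be sketched as a simple latency-budget check. This is a minimal sketch, assuming each tier is characterized only by the upper bound of its projected latency range (10 ms edge, 100 ms fog, 500 ms cloud) and that a task should run on the most centralized tier that still meets its deadline, so scarce edge and fog capacity is reserved for work that needs it. The `Tier` table and the `place_task` function are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    worst_case_latency_ms: float  # upper bound of the projected latency range


# Hypothetical tier table using the upper bounds of the projected ranges,
# ordered from most centralized (preferred for efficiency) to most local.
TIERS = [
    Tier("cloud", 500.0),
    Tier("fog", 100.0),
    Tier("edge", 10.0),
]


def place_task(deadline_ms: float) -> str:
    """Return the most centralized tier whose worst-case latency fits the deadline."""
    for tier in TIERS:
        if tier.worst_case_latency_ms <= deadline_ms:
            return tier.name
    raise ValueError(f"no tier can meet a {deadline_ms} ms deadline")


if __name__ == "__main__":
    for deadline in (5000, 250, 50, 10):
        print(deadline, "ms ->", place_task(deadline))
```

A nightly model-training job with a deadline of seconds lands in the cloud, while a 50 ms safety alert is pushed down to the edge. A real orchestrator would also weigh node load, energy, and data locality.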

The Edge and Fog Layers in Action

The Edge and Fog layers are composed of ruggedized servers, gateways, and smart sensors. Instead of a massive, centralized data center, think of smaller, distributed “micro data centers” that live closer to where data is generated.

Edge Data Center

A self-contained, ruggedized rack that can be deployed in a factory, a retail store, or at the base of a cell tower.

Smart City Sensors

These devices, mounted on lamp posts, collect real-time data on traffic, parking, air quality, and more.

These components form the backbone of a smart city, enabling applications like intelligent traffic management, public safety alerts, and efficient resource allocation.
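To make the edge layer's "reduce noise and handle immediate actions" role concrete, here is a minimal sketch of what a gateway might do with a stream of lamp-post sensor readings. It smooths each window with a median, flags an immediate local alert when a threshold is crossed, and forwards only one compact summary per window upstream. The reading format, the 60-sample window, the 35.0 threshold, and the function names are all illustrative assumptions, not values from this analysis.

```python
from statistics import median


def summarize_window(readings, alert_threshold):
    """Reduce one window of raw readings to a compact summary.

    readings: list of (timestamp_s, value) tuples from a single sensor.
    Returns (summary, alert), where alert is True if the median exceeds the threshold.
    """
    values = [v for _, v in readings]
    smoothed = median(values)  # the median suppresses isolated spikes (noise)
    summary = {
        "start": readings[0][0],
        "end": readings[-1][0],
        "count": len(values),
        "median": smoothed,
        "max": max(values),  # keep the raw peak so upstream analytics can still see it
    }
    return summary, smoothed > alert_threshold


def edge_pipeline(stream, window_size=60, alert_threshold=35.0):
    """Yield one summary per window instead of forwarding every raw reading.

    stream: list of (timestamp_s, value) tuples (hypothetical format).
    """
    for i in range(0, len(stream), window_size):
        window = stream[i:i + window_size]
        summary, alert = summarize_window(window, alert_threshold)
        if alert:
            summary["alert"] = True  # a real gateway would act locally here
        yield summary


if __name__ == "__main__":
    # Hypothetical air-quality stream: one reading per second for 3 minutes,
    # with a single noisy spike that the median ignores.
    stream = [(t, 20.0) for t in range(180)]
    stream[30] = (30, 400.0)
    summaries = list(edge_pipeline(stream))
    print(len(stream), "readings ->", len(summaries), "summaries")
```

In this toy run, 180 raw readings become 3 summaries, and the lone spike does not trigger a false alert.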

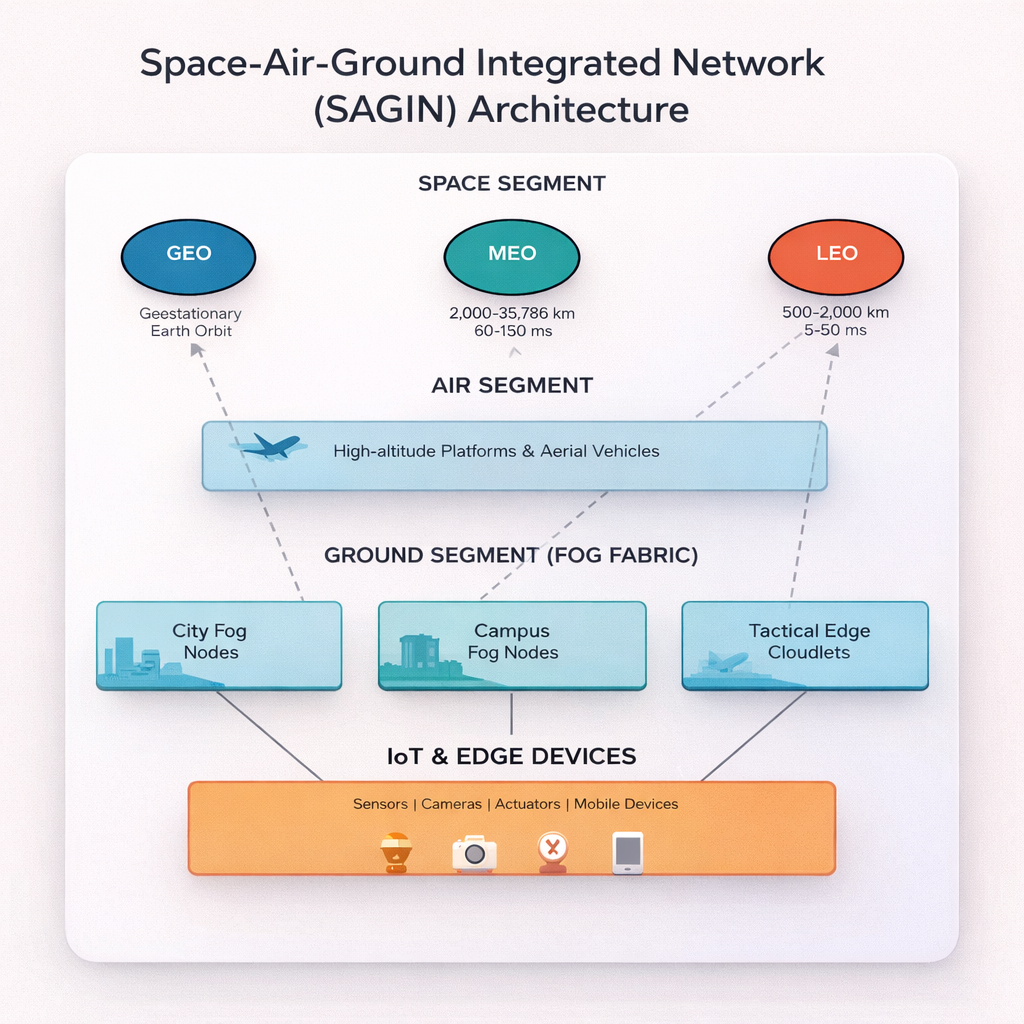

The Space Layer: A Network Above the Clouds

The most ambitious part of my hypothesis is the integration of a space-based fog layer. This involves a constellation of Low Earth Orbit (LEO) satellites that act as data relays and processing nodes, creating a truly global network.

LEO Satellite Constellation

Thousands of small satellites work together to provide low-latency internet and data services to the entire globe.

Ground Station

These are the critical link between the terrestrial internet and the satellite network, sending and receiving data.
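A back-of-the-envelope calculation shows why orbit altitude matters for the low-latency claim. The sketch computes only the minimum speed-of-light propagation delay for a bent-pipe path (user terminal up to a satellite directly overhead, then down to a ground station), ignoring slant range, inter-satellite hops, queuing, and processing. The 550 km LEO altitude is an assumed representative value. 35,786 km is the standard geostationary altitude, used for comparison.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458


def bent_pipe_rtt_ms(altitude_km: float) -> float:
    """Minimum round-trip propagation delay (ms) for user -> satellite -> ground station.

    Assumes the satellite is directly overhead both endpoints, so each one-way trip
    is up plus down (2 * altitude), and the round trip doubles that again.
    """
    one_way_km = 2 * altitude_km
    return 2 * one_way_km / SPEED_OF_LIGHT_KM_S * 1000


if __name__ == "__main__":
    leo = bent_pipe_rtt_ms(550)     # assumed LEO shell altitude
    geo = bent_pipe_rtt_ms(35_786)  # geostationary altitude
    print(f"LEO: {leo:.1f} ms, GEO: {geo:.1f} ms")
```

Even this idealized floor (about 7 ms for LEO versus roughly 477 ms for GEO) illustrates why low orbits, not geostationary ones, are the plausible candidates for a space-based fog layer.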

Key Analytical Projections & Hypothesized Gains

My analysis projects significant, quantifiable benefits for this new architectural model.

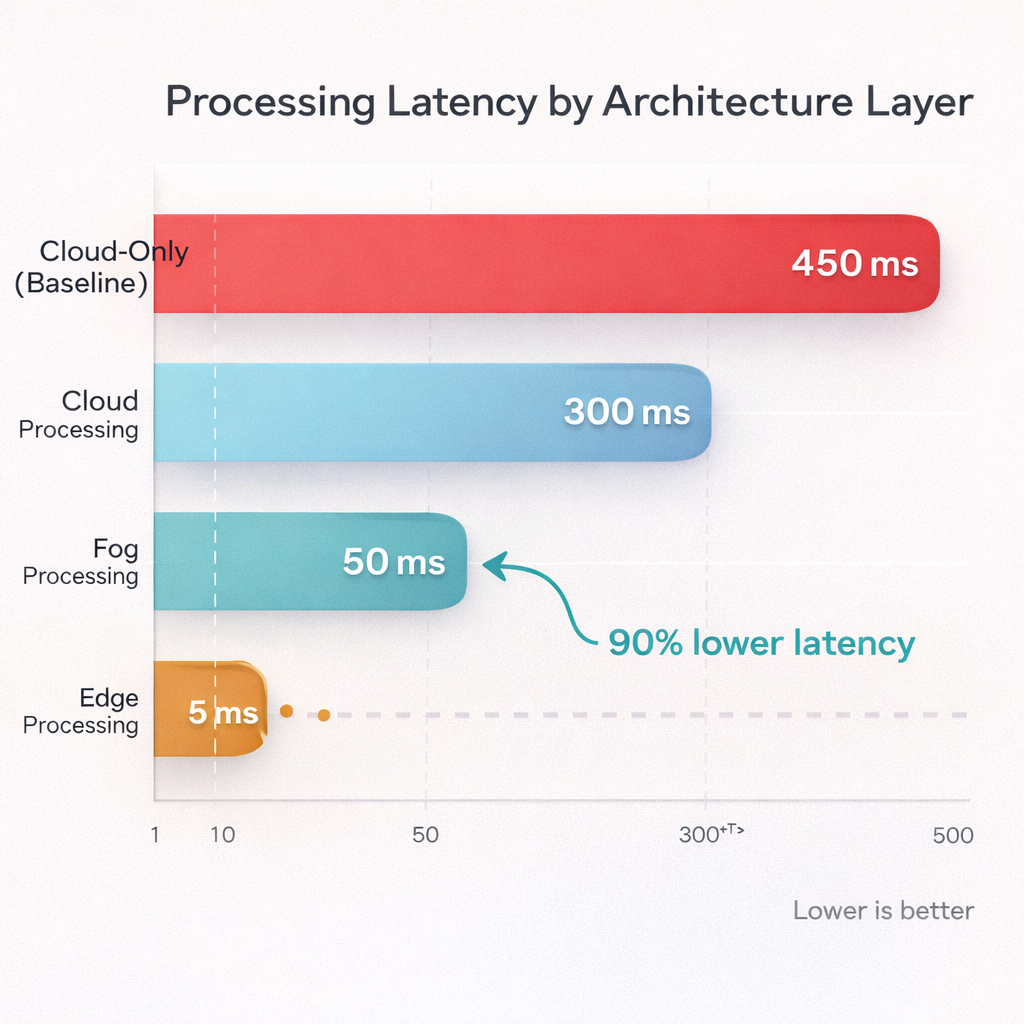

1. Projected Latency Reduction

By processing data closer to its source, the fog layer could dramatically reduce response times. My models suggest that a hybrid fog architecture could achieve up to a 90% reduction in 95th-percentile latency compared to cloud-only systems. This would enable a new class of real-time applications that are currently infeasible.
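The metric itself is simple to compute: take the 95th-percentile latency of each deployment and express the difference as a fraction of the baseline. The sketch below uses the nearest-rank percentile definition and purely hypothetical sample data. It illustrates the calculation only, not the measurements or models behind the 90% projection.

```python
import math


def percentile_nearest_rank(samples, p):
    """Return the p-th percentile (0 < p <= 100) using the nearest-rank method."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank; integer math avoids float drift
    return ordered[rank - 1]


def tail_latency_reduction(baseline_ms, candidate_ms, p=95):
    """Fractional reduction in p-th percentile latency of candidate vs. baseline."""
    base = percentile_nearest_rank(baseline_ms, p)
    cand = percentile_nearest_rank(candidate_ms, p)
    return 1 - cand / base


if __name__ == "__main__":
    # Hypothetical request latencies (ms), 20 requests per deployment.
    cloud_only = [120] * 15 + [180, 220, 300, 400, 450]
    hybrid_fog = [15] * 15 + [20, 25, 30, 50, 60]
    print(f"p95 reduction: {tail_latency_reduction(cloud_only, hybrid_fog):.1%}")
```

Tail percentiles are used rather than averages because a real-time application is constrained by its slowest responses, which the mean tends to hide.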

2. Hypothesized Resilience and Autonomy

I hypothesize that the GRFF would be inherently more resilient than a cloud-only design. In the event of a network failure, fog nodes could maintain essential local services. My availability models predict that this architecture could maintain 99.5% service availability even with significant network disruptions.
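One way to see why local autonomy helps is a textbook series/parallel availability model with independent failures. In a cloud-only design, a local service is up only when both the WAN link and the cloud are up (series). In the hybrid design, essential local services depend only on the fog tier, which can be made redundant (parallel). The component availabilities below are illustrative assumptions, and this simple model is not the availability model behind the 99.5% projection.

```python
def series(*availabilities: float) -> float:
    """All components must be up (independent failures assumed)."""
    result = 1.0
    for a in availabilities:
        result *= a
    return result


def parallel(*availabilities: float) -> float:
    """At least one redundant component must be up (independent failures assumed)."""
    all_down = 1.0
    for a in availabilities:
        all_down *= 1 - a
    return 1 - all_down


if __name__ == "__main__":
    # Illustrative availabilities, not measured values.
    wan_link = 0.98   # a link that suffers frequent disruptions
    cloud = 0.999
    fog_node = 0.99

    cloud_only = series(wan_link, cloud)
    hybrid_local = parallel(fog_node, fog_node)  # two redundant fog nodes
    print(f"cloud-only: {cloud_only:.4f}, hybrid (local services): {hybrid_local:.4f}")
```

The key design point is structural: removing the WAN link from the critical path of local services means an unreliable link no longer caps their availability.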

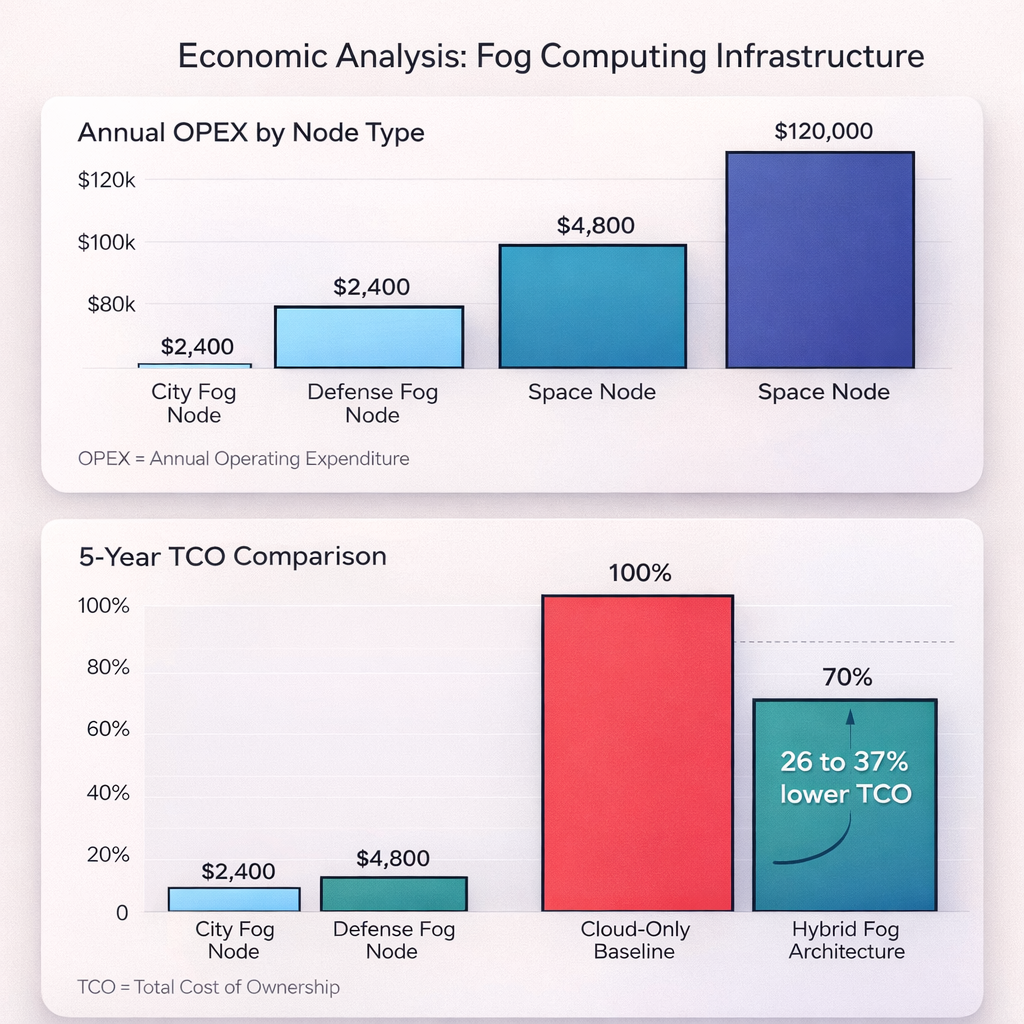

3. Projected Economic Advantages

My analysis indicates a strong economic case for this model. By processing data locally, fog nodes would drastically reduce the volume of data sent to the cloud. My economic models project a 26-37% reduction in 5-year Total Cost of Ownership (TCO) for a hybrid fog architecture compared to a cloud-only baseline.
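The structure of a five-year TCO comparison can be sketched as upfront capital cost plus five years of recurring cost. In this framing, the fog option adds hardware but cuts the recurring cost of backhauling data. Every input here is a hypothetical placeholder, including the data volume, per-GB transfer price, hardware and operating costs, and the 80% local-reduction ratio. The output depends entirely on these assumptions, and this is not the model behind the 26-37% projection.

```python
from dataclasses import dataclass

YEARS = 5


@dataclass
class Deployment:
    capex: float                 # upfront hardware and installation cost ($)
    annual_opex: float           # power, maintenance, cloud compute ($/year)
    gb_to_cloud_per_year: float  # data volume backhauled to the cloud
    transfer_cost_per_gb: float  # backhaul plus ingest price ($/GB)

    def tco(self, years: int = YEARS) -> float:
        """Total cost of ownership: capex plus recurring costs over the horizon."""
        recurring = self.annual_opex + self.gb_to_cloud_per_year * self.transfer_cost_per_gb
        return self.capex + years * recurring


if __name__ == "__main__":
    raw_gb_per_year = 500_000  # hypothetical raw sensor data volume
    price_per_gb = 0.05        # hypothetical transfer price

    cloud_only = Deployment(capex=0, annual_opex=60_000,
                            gb_to_cloud_per_year=raw_gb_per_year,
                            transfer_cost_per_gb=price_per_gb)
    hybrid_fog = Deployment(capex=80_000, annual_opex=40_000,
                            gb_to_cloud_per_year=raw_gb_per_year * 0.2,  # 80% handled locally
                            transfer_cost_per_gb=price_per_gb)

    saving = 1 - hybrid_fog.tco() / cloud_only.tco()
    print(f"cloud-only: ${cloud_only.tco():,.0f}, "
          f"hybrid: ${hybrid_fog.tco():,.0f}, saving: {saving:.0%}")
```

Varying the capex and reduction ratio in this sketch is a quick way to see which assumptions a TCO claim is most sensitive to.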

The Architectural Vision: A Space-Air-Ground Integrated Network (SAGIN)

Extending this analysis, my ultimate vision for the GRFF is a unified fabric that seamlessly integrates terrestrial networks with non-terrestrial assets. This Space-Air-Ground Integrated Network (SAGIN) would create a truly global computing continuum.
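At its simplest, a SAGIN-style fabric needs a policy for choosing among terrestrial, aerial, and satellite paths as conditions change. The sketch below assumes a hypothetical path table holding a current up/down state and an estimated latency for each path, and picks the lowest-latency path that is currently up. A real orchestrator would also weigh cost, capacity, and energy, which are omitted here. The `Path` type and `select_path` function are illustrative names.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Path:
    segment: str       # "ground", "air", or "space"
    latency_ms: float  # current estimate, e.g. from periodic probes
    is_up: bool


def select_path(paths: list[Path]) -> Optional[Path]:
    """Return the lowest-latency path that is currently up, or None if all are down."""
    available = [p for p in paths if p.is_up]
    return min(available, key=lambda p: p.latency_ms, default=None)


if __name__ == "__main__":
    paths = [
        Path("ground", latency_ms=12.0, is_up=False),  # e.g. a fiber cut, for illustration
        Path("air", latency_ms=18.0, is_up=True),      # e.g. a high-altitude platform
        Path("space", latency_ms=35.0, is_up=True),    # e.g. a LEO relay
    ]
    chosen = select_path(paths)
    print(chosen.segment if chosen else "no path available")
```

When the terrestrial link fails, traffic falls back to the best surviving non-terrestrial path instead of failing outright, which is the resilience property the SAGIN vision relies on.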

Learn More


To further explore these topics, I’ve compiled a list of excellent videos that break down the concepts of fog computing and showcase smart city implementations.


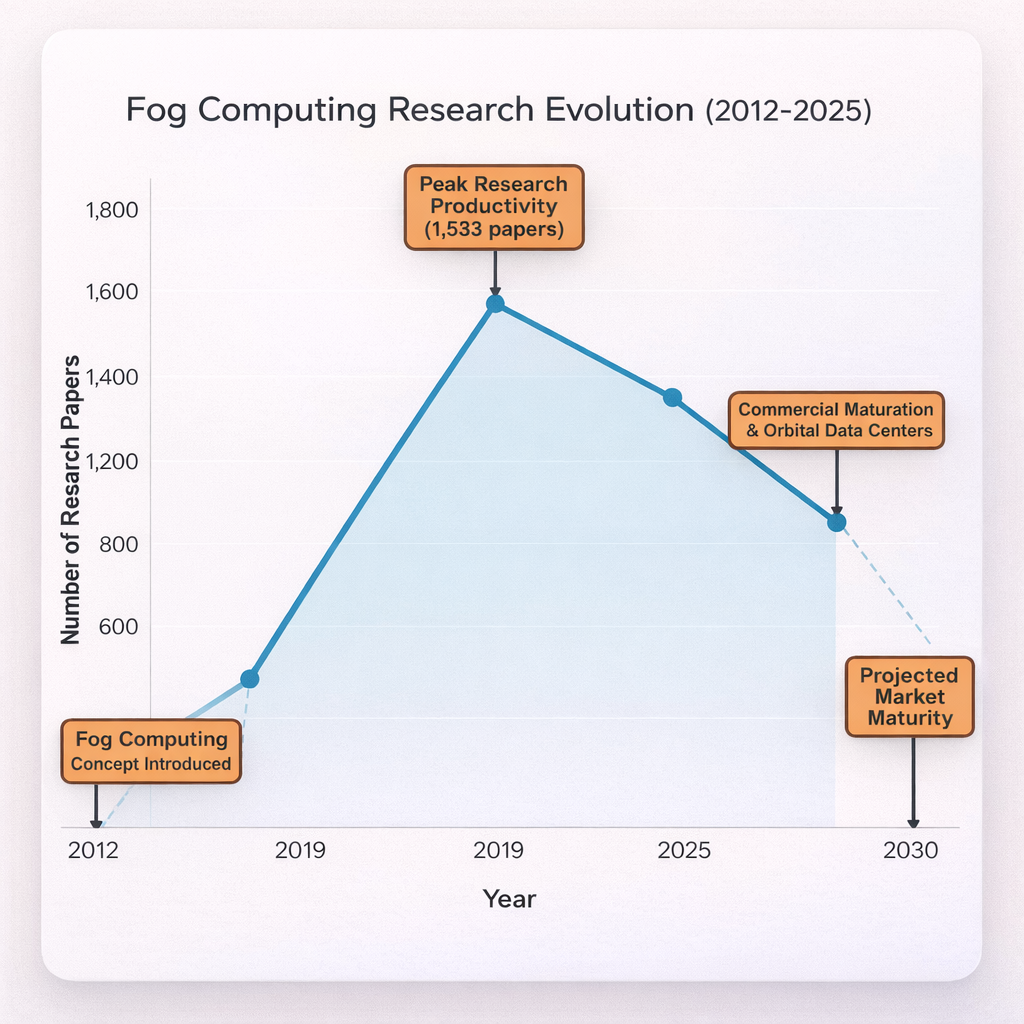

A Timeline of Innovation

The Basis for My Analysis

The concept of Fog Computing has evolved rapidly. My analysis is grounded in this technological progression, which indicates a clear trend toward commercial viability and implementation.

Conclusion: An Analyst’s View of the Future

My analysis suggests that the Global Resilient Fog Fabric represents a logical and necessary paradigm shift in distributed systems. I have spent five years studying these trends, and I hypothesize that by intelligently orchestrating computation across a hybrid of edge, fog, and cloud resources, we can build systems that are faster, more resilient, and more cost-effective.

The principles and models outlined here are my data-driven projection of a robust blueprint for the next generation of smart infrastructure. The logical next step is to move from hypothesis to implementation: building a commercial-grade orchestration platform for the GRFF and deploying pilot programs to validate these projections in the real world.

Selected Foundational Research

The following sources formed the basis of my analysis and are provided for context.

1. Costa, B., et al. (2022). Orchestration of Fog Computing services: A systematic and comprehensive review. Journal of Parallel and Distributed Computing.

2. National Institute of Standards and Technology (NIST). (2018). NIST Special Publication 500-325: Fog Computing Conceptual Model.

3. Internet Engineering Task Force (IETF). (2022). RFC 9171: Bundle Protocol Version 7.

4. 3rd Generation Partnership Project (3GPP). (2022). Release 17: Support for Non-Terrestrial Networks (NTN).

5. Mordor Intelligence. (2025). Fog Computing Market Size & Share Analysis - Growth Trends & Forecasts (2025 - 2030).